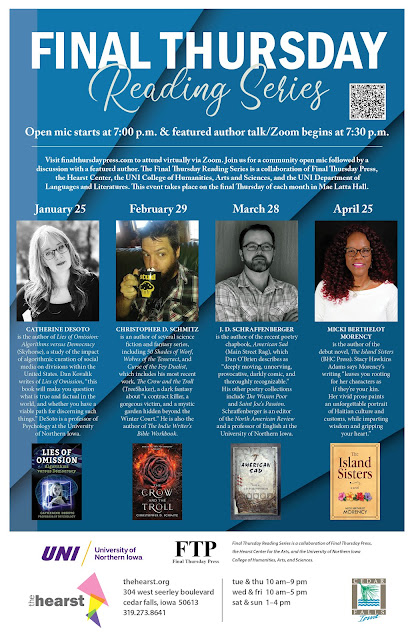

2024’s first Final Thursday Reading Series featured reader is Catherine DeSoto. DeSoto is the author of Lies of Omission: Algorithms versus Democracy (Skyhorse), a study of the impact of algorithmic curation of social media on divisions within the United States. Dan Kovalik writes of Lies of Omission, “this book will make you question what is true and factual in the world, and whether you have a viable path for discerning such things.” DeSoto is a professor of Psychology at the University of Northern Iowa.

The Final Thursday Reading Series takes place on January 25 at the Hearst Center for the Arts in Cedar Falls, Iowa. There will be an open mic at 7:00 p.m. (bring your best five minutes of original creative writing). Catherine DeSoto takes the stage at 7:30. The featured reading will also be simulcast on Zoom. Click HERE to register for a link.

Interview conducted by Jim O’Loughlin

JIM O’LOUGHLIN: While there has been a lot of media attention focused on the impact of social media on individual behavior, you approach this issue as a psychologist. How has that allowed you to view this issue differently?

CATHERINE DESOTO: My background in neuroscience and psychology allows me to characterize what is happening in the brain when one receives certain kinds of information, and then link this to social psychology research on preferring agreement over disagreement. In all, this makes our little pocket gadgets, and the way they work with the background algorithm, the perfect storm for increasing polarization. There is actually a lot of relevant research and knowledge that explains why society is splitting. Basically, human beings have a powerful innate love to be right; it is hardwired in the brain, and this allows us to understand the addictive nature of modern social media.

JO: Lies of Omission details some of the information gaps that exist in how social media presents information on controversial topics. Can you give an example of how algorithms feed people with different views different versions of the world?

CD: The divergent media feeding really began 15-20 years ago. By 2016, all major feeds were changing content based on what articles the user had been clicking and pausing upon. The book goes into detail, but for example, Neighbor A opens her phone and sees an article vividly describing the details of an immigrant who committed a horrible crime, while Neighbor B opens her phone and is provided articles depicting a mother fleeing violence along with pictures of her young child with braids and a doll in her hand, stuck camping on the US border for nine months. Views on immigration problems will further diverge. Specific research on the algorithms' effects will be overviewed in my talk, and is well detailed in the book.

JO: While you are concerned about the effect of social media on individuals, the subtitle of this book, "Algorithms versus Democracy," also points to your concerns about the political impact of these developments. What can be done to stop the corrosive impact on our politics?

CD: I wish I had a good answer. I like to hope that increased insight and awareness might help in some small way.

JO: In writing this book, what were the pleasures and challenges in taking scientific data and presenting it for an audience that may include specialists as well as general readers?

CD: Very hard to do, and I am sure I failed to strike the right balance at times. For the second part, I earnestly sought to give a strong and accurate overview of what a person who holds the opposing view might say and focus on. I hope that it is hard to tell my true view after reading the pros and cons of a topic. If that happens, I feel I succeeded. That is what I was going for.

JO: How, if at all, has writing this book affected your own use of social media? Do you do anything differently after spending so much time on this subject?

CD: Yes, actually. Like everyone, I do not want to have holes in my knowledge about issues I care about. Often, I try to look for specific content by name, and not let the media feeds (Facebook, YouTube, my News feed) select articles for me. I am aware that what is served to me is algorithm driven and will automatically work to keep some information from me, as well as buffer me from opposing information. I don't want to let that happen, or at least I wish to try to limit it. Another thing I do: I try to click on and pause on articles I do not agree with, even if I don't read them. I do this to try and keep my feed from being too catered to my own viewpoints.